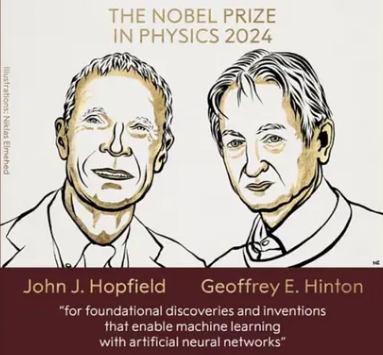

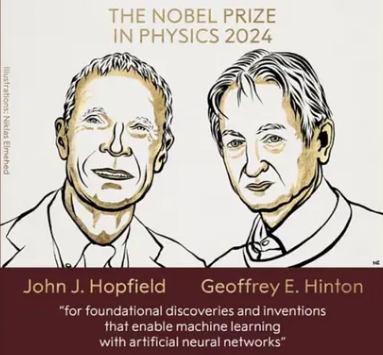

Nobel Prize in Physics 2024: Groundbreaking Advances in AI and Machine Learning (GS Paper 3, Science & Technology)

Why in News?

- The 2024 Nobel Prize in Physics has been awarded to John Hopfield and Geoffrey Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks", work that underpins much of modern artificial intelligence (AI).

- Their pioneering research, initiated in the 1980s, has fundamentally reshaped how machines learn from data, leading to the widespread AI applications we see today.

- This recognition highlights the intersection of physics, neuroscience, and computer science: both laureates used ideas from physics, such as the energy of a system of interacting units and statistical mechanics, to build networks that learn.

What is Machine Learning (ML)?

About: Machine learning is a subset of artificial intelligence that empowers computers to learn from data, identify patterns, and make decisions without being explicitly programmed for each specific task. Instead of relying on hard-coded rules, machine learning algorithms analyze large datasets to detect trends and make predictions. The essence of ML lies in its ability to improve performance over time as it is exposed to more data.
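To make "learning from data instead of hard-coded rules" concrete, here is a minimal Python sketch (an illustration, not taken from any particular library). The program is never told the rule y = 2x + 1; it estimates the slope and intercept from example pairs using ordinary least squares, one of the simplest supervised learning methods. The function name `fit_line` and the toy data are hypothetical.

```python
def fit_line(xs, ys):
    """Estimate slope w and intercept b from examples by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

# Training examples produced by a rule the program is never told: y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(f"learned rule: y = {w:.2f}x + {b:.2f}")  # learned rule: y = 2.00x + 1.00
print(w * 10 + b)  # prediction for an unseen input x = 10: 21.0
```

Real ML models have far more parameters and noisier data, but the principle is the same: the rule is estimated from examples rather than written by hand.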

Applications of Machine Learning:

- Image and Speech Recognition: Machine learning algorithms enable technologies like facial recognition and voice-activated assistants to understand and interpret human input effectively.

- Recommendation Systems: Streaming services and online retailers use ML to analyze user behavior, providing personalized recommendations that enhance user experience.

- Fraud Detection: Financial institutions implement ML models to identify unusual patterns and flag potentially fraudulent transactions, safeguarding user accounts.

- Healthcare Diagnostics: Machine learning aids in analyzing medical data, improving diagnostic accuracy and assisting in early disease detection.

- Autonomous Vehicles: ML technologies are critical in enabling self-driving cars to navigate complex environments by interpreting sensor data and making real-time decisions.

What is Deep Learning (DL)?

About: Deep learning is a specialized branch of machine learning that focuses on artificial neural networks with multiple layers (hence the term "deep"). These networks are loosely inspired by the way neurons in the brain are connected, and they can learn complex patterns from vast amounts of unstructured data such as images, audio, and text. Deep learning has transformed many fields by allowing computers to perform tasks that were once thought to require human intelligence.

Key Applications of Deep Learning:

- Image and Speech Recognition: Deep learning powers advanced applications such as face detection and virtual assistants, enabling machines to understand and respond to human interactions.

- Autonomous Vehicles: Self-driving cars rely heavily on deep learning to process data from cameras and sensors, making split-second decisions for safe navigation.

- Natural Language Processing (NLP): Deep learning enhances language translation services and chatbots, improving human-computer interaction and communication.

- Medical Diagnostics: By analyzing medical images, deep learning models help diagnose conditions such as cancer, supporting faster and more accurate treatment decisions.

ML vs. DL

While both machine learning and deep learning involve training algorithms to recognize patterns, they differ significantly in complexity and methodology:

- Machine Learning: Often relies on structured data and on humans to design the input features (feature engineering). Traditional ML algorithms can perform well on smaller datasets and simpler tasks.

- Deep Learning: Automates feature discovery through its multi-layered neural networks, making it more powerful for complex tasks that involve large volumes of unstructured data. It excels in scenarios where traditional ML may struggle, particularly in image and speech recognition.
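The difference in feature extraction can be illustrated with a toy sketch, assuming the classic XOR problem (output 1 when exactly one of two inputs is 1) and a single-layer perceptron as the "traditional" model. On the raw inputs no single linear boundary separates the classes, so the perceptron cannot get all four cases right; once a human adds the hand-designed feature x1*x2, the same algorithm succeeds. The function names are hypothetical; the perceptron rule itself is the standard one.

```python
def predict(w, b, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, epochs=20):
    """Classic perceptron learning rule; samples are (features, label) pairs, label in {0, 1}."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            err = y - predict(w, b, x)  # -1, 0 or +1
            w = [wi + err * xi for wi, xi in zip(w, x)]
            b += err
    return w, b

def accuracy(w, b, samples):
    return sum(predict(w, b, x) == y for x, y in samples) / len(samples)

# XOR: the output is 1 when exactly one input is 1
xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

# Raw inputs only: XOR is not linearly separable, so no weights get all four right
raw = [(list(x), y) for x, y in xor]
# A human-designed extra feature, x1*x2, makes the two classes linearly separable
crafted = [([x[0], x[1], x[0] * x[1]], y) for x, y in xor]

raw_acc = accuracy(*train_perceptron(raw), raw)
crafted_acc = accuracy(*train_perceptron(crafted), crafted)
print("raw features:", raw_acc)                   # below 1.0
print("with hand-crafted feature:", crafted_acc)  # 1.0
```

A deep network with a hidden layer can discover an equivalent intermediate feature on its own during training, which is what "automated feature discovery" refers to.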

What is an Artificial Neural Network (ANN)?

About: Artificial neural networks are computational models inspired by the structure and functioning of the human brain. They consist of interconnected nodes (or neurons) organized in layers, designed to process data and learn from it. ANNs adjust the strengths (weights) of the connections between their nodes based on the data they receive, allowing them to improve their performance over time.

Key Features of ANNs:

- Structure: ANNs consist of an input layer, one or more hidden layers, and an output layer. Each node computes a weighted sum of its inputs, adds a bias, and applies an activation function, transforming the data as it passes through the network.

- Learning: ANNs learn from their mistakes. During training, the backpropagation algorithm calculates how much each connection weight contributed to the prediction error, and an optimization method such as gradient descent then adjusts the weights to reduce that error.

- Output: The final layer generates predictions or classifications based on the input data, which can be in the form of probabilities or numerical values.
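The structure, learning, and output steps above can be sketched in plain Python. This is an illustrative toy, not a production design: the class name `TinyNet`, the three hidden sigmoid units, the XOR training data, the squared-error loss, and the learning rate of 0.5 are all assumptions chosen for readability. Whether this particular random initialization fully solves XOR is not guaranteed; the point is the mechanics of the forward pass and backpropagation.

```python
import math
import random

def sigmoid(z):
    """Activation function squashing any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """Input layer (2 values) -> hidden layer of sigmoid nodes -> 1 sigmoid output node."""

    def __init__(self, n_hidden=3, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Each hidden node: activation(weighted sum of inputs + bias)
        self.h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w1, self.b1)]
        # Output node: a value in (0, 1), readable as a probability
        self.out = sigmoid(sum(w * h for w, h in zip(self.w2, self.h)) + self.b2)
        return self.out

    def train_step(self, x, y, lr):
        out = self.forward(x)
        # Backpropagation: chain rule for the squared error E = (out - y)^2 / 2
        d_out = (out - y) * out * (1 - out)
        d_hidden = [d_out * w * h * (1 - h) for w, h in zip(self.w2, self.h)]
        # Gradient descent: move every weight against its error gradient
        self.w2 = [w - lr * d_out * h for w, h in zip(self.w2, self.h)]
        self.b2 -= lr * d_out
        for j, d in enumerate(d_hidden):
            self.w1[j] = [w - lr * d * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * d

data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def mean_loss(net):
    return sum((net.forward(x) - y) ** 2 / 2 for x, y in data) / len(data)

net = TinyNet(n_hidden=3, seed=0)
before = mean_loss(net)
for epoch in range(5000):
    for x, y in data:
        net.train_step(x, y, lr=0.5)
after = mean_loss(net)

print(f"loss before training {before:.4f}, after {after:.4f}")
for x, y in data:
    print(x, "target", y, "prediction", round(net.forward(x), 2))
```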

Applications of ANNs:

- Image and Video Recognition: ANNs are widely used in facial recognition systems and video analysis, enabling machines to identify and classify visual data effectively.

- Speech Recognition: Technologies like Siri and Alexa utilize ANNs to interpret voice commands and deliver accurate responses.

- Natural Language Processing: ANNs support applications in language translation and sentiment analysis, making it easier to understand and generate human language.

- Medical Diagnostics: ANNs assist in detecting diseases from medical imaging, providing valuable support to healthcare professionals.

- Autonomous Vehicles: ANNs help self-driving cars interpret sensor data and make navigation decisions in real time.

Works of Nobel Prize Winners

John Hopfield:

- Mimicking the Brain with Neural Networks: In 1982, John Hopfield introduced a type of artificial neural network, now called the Hopfield network, that models associative memory, the brain's ability to recall a whole memory from a fragment.

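- The Hopfield network stores patterns (such as images) in the strengths of the connections between its nodes. Each stored pattern corresponds to a low-energy state of the whole network, a description borrowed from the physics of magnetic materials. When given an incomplete or noisy version of a pattern, the network updates its nodes step by step, lowering its energy until it settles into the closest stored pattern.

- This allows a stored image or sound to be reconstructed from a partial input, an idea that influenced later work on pattern recognition, including facial recognition and image restoration.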

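- Hopfield’s work drew inspiration from neuroscience, particularly the ideas of Donald Hebb, who proposed that the connection between two neurons strengthens when they are repeatedly active together. The Hopfield network sets its connection strengths with a similar (Hebbian) rule.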
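The recall-from-noise behaviour of a Hopfield network can be illustrated with a toy example. The sketch below assumes the common textbook form: units take the values +1 or -1, weights are set by a Hebbian rule (w_ij proportional to the product of units i and j, summed over the stored patterns, with no self-connections), and units are updated one at a time to the sign of their weighted input, a rule that never raises the network's energy. The two 8-unit patterns and the function names are hypothetical.

```python
def train_hebbian(patterns):
    """Hebbian rule: w_ij = (1/N) * sum over patterns of p_i * p_j, with no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def energy(w, s):
    """Hopfield energy E = -1/2 * sum_ij w_ij s_i s_j; stored patterns sit in low-energy valleys."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=10):
    """Asynchronous updates: each unit takes the sign of its weighted input until nothing changes."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            h = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

# Two stored 8-unit patterns (+1 = "on", -1 = "off"), chosen orthogonal for illustration
a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hebbian([a, b])

# Corrupt two of the eight units of pattern a
noisy = list(a)
noisy[0] = -noisy[0]
noisy[4] = -noisy[4]

restored = recall(w, noisy)
print(restored == a)  # True: the network settles back into the stored pattern
print("energy noisy:", energy(w, noisy), "energy restored:", energy(w, restored))
```

The restored pattern has lower energy than the corrupted input, which is the "rolling downhill into the nearest valley" picture used to explain the network.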

Geoffrey Hinton:

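- Deep Learning and Advanced Neural Networks: Geoffrey Hinton built on Hopfield’s ideas. Working with Terrence Sejnowski, he developed the Boltzmann machine, a network that uses tools from statistical physics to learn the characteristic features of a given type of data, and he went on to help develop the deep neural networks that now handle complex tasks such as voice and image recognition.

- He co-authored, with David Rumelhart and Ronald Williams, an influential 1986 paper that popularized the backpropagation algorithm for training multi-layer networks. Backpropagation calculates how much each connection contributed to a prediction error, so that the weights between nodes can be adjusted to reduce that error; this later allowed deep networks to learn efficiently from large datasets.

- Hinton's work gained wide attention at the 2012 ImageNet Large Scale Visual Recognition Challenge, where a deep convolutional network built by his team (Alex Krizhevsky, Ilya Sutskever, and Hinton), later known as AlexNet, achieved a top-5 error rate of about 15%, compared with about 26% for the runner-up, showcasing the potential of deep learning.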

- His contributions have had far-reaching implications across various fields, including astronomy, where machine learning aids in analyzing vast datasets.
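Backpropagation is the chain rule from calculus applied layer by layer, from the output back toward the input. The minimal sketch below assumes a hypothetical chain of one hidden unit and one output unit (sigmoid activations, squared error, arbitrary parameter values). It computes the gradients by hand, checks them against finite differences, and then takes one gradient-descent step. It is a textbook illustration, not code from Hinton's work.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(params, x, y):
    """Squared error of a two-unit chain: x -> hidden h -> output."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    out = sigmoid(w2 * h + b2)
    return 0.5 * (out - y) ** 2

def backprop(params, x, y):
    """Gradients of the loss w.r.t. (w1, b1, w2, b2) via the chain rule, output layer first."""
    w1, b1, w2, b2 = params
    # Forward pass, keeping intermediate values for the backward pass
    h = sigmoid(w1 * x + b1)
    out = sigmoid(w2 * h + b2)
    # Backward pass
    d_z2 = (out - y) * out * (1 - out)  # error at the output unit's weighted input
    d_w2, d_b2 = d_z2 * h, d_z2
    d_z1 = d_z2 * w2 * h * (1 - h)      # error signal propagated back to the hidden unit
    d_w1, d_b1 = d_z1 * x, d_z1
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_grad(params, x, y, eps=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for k in range(len(params)):
        up = list(params)
        up[k] += eps
        down = list(params)
        down[k] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads

params = [0.5, -0.3, 0.8, 0.1]  # arbitrary illustrative weights and biases
x, y = 1.5, 1.0

analytic = backprop(params, x, y)
numeric = numerical_grad(params, x, y)
max_diff = max(abs(g_a - g_n) for g_a, g_n in zip(analytic, numeric))
print("largest gradient mismatch:", max_diff)  # tiny: only finite-difference error

# One gradient-descent step moves each weight against its gradient
lr = 0.5
new_params = [p - lr * g for p, g in zip(params, analytic)]
print("loss before:", loss(params, x, y), "after:", loss(new_params, x, y))
```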

Conclusion

- The groundbreaking contributions of John Hopfield and Geoffrey Hinton to the fields of AI and machine learning have fundamentally transformed technology.

- By bridging the disciplines of neuroscience, physics, and computer science, they have paved the way for modern AI applications that impact daily life and various industries.

- Their achievements, recognized by the 2024 Nobel Prize in Physics, underscore the importance of interdisciplinary research in fostering innovation.

- As AI continues to evolve, the foundational work of Hopfield and Hinton will remain central to advancements in machine learning and artificial intelligence, shaping the future of technology and its applications in society.